Hi there, I’m Alex, and welcome to my Substack.

This is my first article and I’m still figuring out exactly what’s going to make it in here, but if one or more of the following interest you:

Technology

Startups and entrepreneurship

Artificial Intelligence

Emotional Intelligence

Fatherhood and parenting

Self-cultivation and spirituality

Then please do consider subscribing to see (and influence!) how things evolve.

Anyway, on to the post - why did I decide to train an AI replacement of myself?

The question

A bit of context. I recently quit my job as Head of Engineering at an exciting and likely-to-be-very-successful startup to pursue a different type of lifestyle. Going into detail about what that lifestyle looks like and the reasoning behind it is a topic for a future post, but the upshot is that I’m now operating as a Fractional Chief Technical Officer (CTO)[1] with a focus on working with early-stage startups.

I’m pretty fascinated by the developments in AI. But I had found it increasingly difficult to cut through the hype, fear and cynicism surrounding the technology and to get a clear picture in my mind of what the limits and possibilities of it were. I needed to spend some time digging a bit deeper and getting closer to the truth of the matter, or at least improving my understanding.

To achieve this, I wanted to answer a straightforward question:

How likely is it that this technology could make my new career direction fundamentally obsolete, and how quickly might that be the case?[2]

Spoiler alert: the answer proved to be more nuanced than I anticipated, and taught me as much about myself as it did AI.

The experiment

To answer this question, and train an AI to do my job, I first had to become curious about the problems that early-stage start-up founders would turn to a Fractional CTO with. I have spent the last month speaking with numerous non-technical founders about the challenges they’re facing. I’ve also had enough conversations with non-technical stakeholders in my career to know the kinds of questions and pain points that are common. My guiding philosophy throughout my career is that the most important aspect of any technology is the humans creating it and using it. Could I get ChatGPT to embrace this approach, even in a limited way?

Before we get there, a few words on the practicalities. This was very much an experiment, so I wanted to keep it as cheap and simple as possible. Given I already had a paid OpenAI ChatGPT account, and they offer the ability to ‘train’ your own GPT via a simple UI with text input, I opted for that. And for obvious reasons, I decided to call it AiLex.

Training AiLex

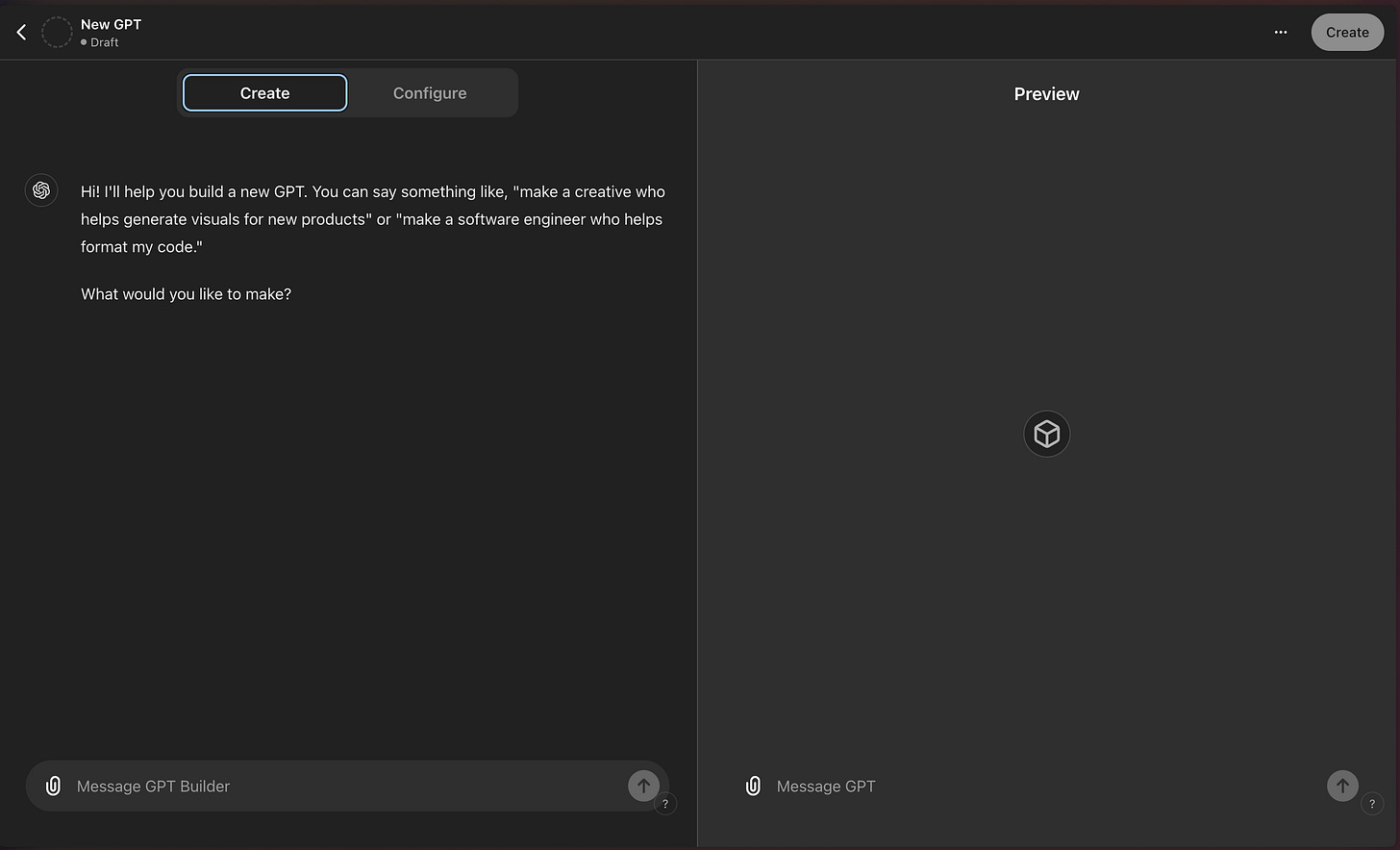

When creating a custom GPT, the first thing you’re presented with is this interface. The screen is divided in two, with the Create and Configure tabs on the left and the Preview of your GPT on the right, allowing you to interact and try it out as you make changes. The Configure tab is a basic web form, where you can fill in fields such as Name, Description, etc.

The Create tab, by contrast, is a chat interface like ChatGPT which asks you questions about what you want to build.

I was drawn to the Create page because the prospect of defining and configuring my app via a chat felt much more appealing and interesting than just filling in a web form.

I soon realised this was a mistake.

For some things, the Create tab did what I wanted. Being asked in conversation what I wanted to call my custom GPT was indeed ‘nicer’ than simply filling in a text box. But once it came to defining the behaviour I wanted AiLex to follow, I began to struggle.

An early issue was that when asked a question like ‘How do I hire my first Engineer?’, AiLex would immediately launch into multi-paragraph answers with 10-step instructions.

This might seem on the surface like what you would want, but this is absolutely not how I would approach a question like this from a founder. Firstly, we need to recognise the fact that the person who’s asking is human. That means they are driven as much by emotional needs as by practical, rational ones. So our first step is to understand them, and we can do this by asking questions and getting insight into the context in which they are operating.

It depends

There’s an old cliché in engineering that the answer to any technical question is always ‘it depends’. Like any stereotype, there’s a grain of truth in it. Good engineering requires an understanding of the why behind the request. This is just as important as what the request is, if not more so. With software, we can create pretty much anything we can imagine. But constraints such as time, budget and access to engineering talent and skillsets will always exist. And if for some reason they don’t, you’ll probably be doing something where the constraint then becomes the laws of physics. All this to say, we never have a blank canvas. We’re always working within a context that limits us in some way. The art of engineering, then, is to work with the inevitable constraints which are present. We aim to arrive at a solution that makes the most of our limited resources as elegantly as possible and allows us to take a step forward.

Which is why being met immediately with a 10-point plan for hiring an engineer felt (to me) so jarring. Where was the context? Why did this person want to hire an engineer at this stage? How were they feeling about this? Excited? Apprehensive? Depending on the answers to these questions, very different approaches could be taken. Having a clear idea of what you want to build and being excited to hire your first engineer to build it is quite different to feeling completely lost and blocked about technical direction and feeling like you need to hire someone to make any progress.

To reflect this, AiLex needed to change. But wrangling the GPT to avoid jumping straight into ‘solutionizing’ proved trickier than anticipated. For example, simply saying ‘be conversational’ or ‘ask why questions’ didn’t have any effect. I even tried asking the GPT to role-play as a user while I pretended to be AiLex, but even after a few back-and-forth exchanges, it would still return that 10-point plan.

After a while, I gave up on the Create interface and went back over to the Configure section. This is where I started to make some headway. By typing directly into the configuration instructions, it became easier and quicker to adjust the prompts. I could add new instructions, delete others and move things around, then immediately see AiLex update in the Preview screen without needing to wait for the ChatGPT Create bot to write its answer to me. I had simplified my iteration process.

This allowed me to get faster feedback and learn more quickly what worked and what didn’t. I found that I needed to be very explicit about instructions. Inputting

ask “why” questions

didn’t have the desired effect, but

“before you offer any answer, YOU MUST ask a question”

did. It also seemed that the earlier on in the context an instruction came, the more weight it was given. Doing all caps seemed to have a bit of an effect, sometimes. By tightening the feedback loop between my input and the custom GPT output, I began to see quicker progress. (Tight feedback loops are vital for building products, whether at the code, team, organisation or customer level. For more on the value of shortening feedback loops and other invaluable design insights, check out Kent Beck’s work).
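To make the ordering idea concrete, here is a minimal sketch of how you might keep the Configure instructions as a prioritised list and assemble them so the non-negotiable rule always comes first. The function name and the second and third rules are hypothetical and only there for illustration; they are not the actual AiLex configuration.

```python
# Hypothetical sketch: only the first rule comes from the experiment above.
# The other rules and all names here are illustrative assumptions.
INSTRUCTIONS = [
    # (priority, text): a lower number means earlier in the instructions
    (2, "Keep replies short; no multi-step plans until asked."),
    (0, "Before you offer any answer, YOU MUST ask a question."),
    (1, "Ask about the founder's context: stage, budget, timeline."),
]


def build_instructions(rules):
    """Join the rules into one block of text, most important first."""
    ordered = sorted(rules, key=lambda rule: rule[0])
    return "\n".join(text for _, text in ordered)


prompt = build_instructions(INSTRUCTIONS)
print(prompt)
```

The output is plain text that could be pasted into the instructions box. Keeping the rules in a list like this makes it quick to reorder or drop one and try again, which is the tight feedback loop described above.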

After a bunch of trial and error, it started to respond in a way that was easier to interact with. I role-played as a potential founder and asked a bunch of questions, and was pleasantly surprised to find I had some interesting conversations. Once or twice, AiLex asked a question or made a suggestion that genuinely made me pause and think about something differently. At one point, it asked whether I preferred to hire an engineer who had more industry knowledge or a broader skillset. I replied that I wasn’t sure. It then recommended that I write a pros and cons list for each approach. This is a pretty obvious piece of advice, but it hadn’t crossed my mind, and as a (pretend) founder it felt useful in that moment. It may well have unblocked me if I had been stuck on a real decision. Sometimes we just need reminding of the obvious, to remember we had the answer all along. In this sense, AiLex helped me appreciate that supporting founders is as much about holding space for them as it is about providing technical expertise.

In the end, I believe a lot of the value that AiLex provides isn’t all that different from the ELIZA program way back in the 60s. This was one of the first chatbots ever created, and it basically just mirrored our own statements back at us as questions. Despite its simplicity, and even when you know what it’s doing, it can still feel like we have a connection with it. We feel heard and are encouraged to open up and articulate our thinking, which can be all it takes to think about a problem slightly differently or find the confidence to make a decision.
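For a feel of how little machinery that mirroring needs, here is a toy sketch in the spirit of ELIZA. It is not the original program or its script: the pronoun table, the `reflect` function and the fixed "Why do you say" opener are all assumptions made for illustration.

```python
import re

# Pronoun swaps used to turn a statement around ("my" becomes "your").
# This small table is an illustrative assumption, not ELIZA's real script.
REFLECTIONS = {"i": "you", "am": "are", "my": "your", "me": "you"}


def reflect(statement: str) -> str:
    """Mirror a statement back at the speaker as a question."""
    words = re.findall(r"[a-z']+", statement.lower())
    swapped = [REFLECTIONS.get(word, word) for word in words]
    return "Why do you say " + " ".join(swapped) + "?"


print(reflect("I am stuck on my hiring decision."))
# prints: Why do you say you are stuck on your hiring decision?
```

There is no understanding anywhere in those few lines, yet the reply still nudges you to explain yourself, which is the effect described above.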

Of course, the potential of ChatGPT is much greater, and there are some undeniably useful and time-saving things it can do. For example, AiLex was able to generate a job spec for my first engineer hire, incorporating all the relevant context and preferences covered in our conversation. I guess the key question boils down to: is AiLex a better experience to interact with than ‘vanilla’ ChatGPT? I think so, but if you have a ChatGPT account give it a try for yourself and tell me what you think:

Takeaways

For me, the most valuable part of the exercise was actually the role-playing as a founder. It gave me a deeper appreciation for the kind of challenges they might be facing. Qualities such as being comfortable with uncertainty, being collaborative and non-judgemental when it comes to discussing technical approaches, and sharing the ambition and passion it takes to launch a start-up quickly became obvious to me. These are qualities I know I embody but which I maybe haven’t put front and centre when I talk about myself, so that was a really helpful learning I will be taking with me.

So, let’s circle back. Do I think that AiLex will be able to replace me anytime soon? The answer is no. The experience and ideas I carry with me into my work can’t be embedded into a Custom GPT so easily. But these are early days, and I’ve been playing around in the kiddy pool when it comes to customising a Large Language Model (LLM). The next step would be to enrich a model via a process called RAG (Retrieval-Augmented Generation), seeing what happens when I feed it the eclectic array of key texts I reference and draw from in my work[3]. But do I think AiLex could help a founder in some small way? I’d like to believe so, and even if not, the lessons I’ve learned along the way have been both interesting and valuable.
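On the RAG idea mentioned above, the core loop is simple: find the passages most relevant to the question, then put them in front of the model along with the question. Below is a toy sketch of that loop. Real systems typically rank passages with embeddings and a vector store; plain word overlap stands in for that here. The notes are hypothetical one-line placeholders, not quotations from any book, and every name in the code is an assumption.

```python
import re

# Hypothetical placeholder notes standing in for passages from reference texts.
NOTES = [
    "Short feedback loops let you learn what works sooner.",
    "Ask about needs and feelings before proposing a solution.",
    "Make small structural changes separately from behaviour changes.",
]


def tokens(text):
    """Lower-case a text and return its set of words."""
    return set(re.findall(r"[a-z]+", text.lower()))


def retrieve(question, notes, k=1):
    """Return the k notes sharing the most words with the question."""
    asked = tokens(question)
    ranked = sorted(notes, key=lambda note: len(asked & tokens(note)), reverse=True)
    return ranked[:k]


def build_prompt(question, notes):
    """Prepend the retrieved notes to the question as context."""
    context = "\n".join(retrieve(question, notes))
    return f"Context:\n{context}\n\nQuestion: {question}"


print(build_prompt("How do I get feedback sooner?", NOTES))
```

The text printed at the end is what would be sent to the model, so its answer can draw on the retrieved note rather than only on what it already knows.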

On that note, I will wrap up this post. Please do give AiLex a go and see what you think. I’d love to hear any feedback, including anything that obviously (or not so obviously) seems weird or could be improved. If you enjoyed this read please do consider subscribing, and if you need any human support on the technical side of things reach out on LinkedIn.

[1] If you want a more comprehensive definition of what a Fractional CTO is and does, check out this discussion.

[2] This didn’t come out of nowhere. I’d recently come across the concept of The Four Futures, and indeed used its own custom GPT to help me think about my role (here you can see what it made of my job prospects).

[3] What happens when you blend together Non-Violent Communication, the Tao Te Ching, Poor Charlie’s Almanack and Kent Beck’s Tidy First? I’ll let you know in a future post, dear reader.
